The package I installed is spark-1.6.1-bin-hadoop2.6.tgz. This version requires JDK 1.7 or later.

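I also installed Scala, using scala-2.11.8.tgz. Strictly speaking, the prebuilt spark-1.6.1 package bundles its own Scala runtime: the spark-shell banner further down reports Scala 2.10.5.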

tg@master:/software$ tar -zxvf scala-2.11.8.tgz

tg@master:/software/scala-2.11.8$ ls

bin doc lib man

Add environment variables

tg@master:/$ sudo gedit /etc/profile

Add:

export SCALA_HOME=/software/scala-2.11.8

export PATH=$SCALA_HOME/bin:$PATH

tg@master:/$ source /etc/profile
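Editing /etc/profile by hand again on a rerun can leave duplicate export lines. A minimal sketch, assuming bash and GNU grep: add_line_once is a hypothetical helper (not part of Scala or Spark) that appends a line only when that exact line is not already in the file. Writing to /etc/profile needs root, so run such a script with sudo.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if the exact line is absent,
# so repeating the setup does not duplicate export lines.
add_line_once() {
  local file="$1" line="$2"
  # -q quiet, -x whole-line match, -F fixed string (no regex interpretation);
  # a missing file makes grep fail, so the line is appended and the file created.
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Example (as root):
#   add_line_once /etc/profile 'export SCALA_HOME=/software/scala-2.11.8'
#   add_line_once /etc/profile 'export PATH=$SCALA_HOME/bin:$PATH'
```

The single quotes keep $SCALA_HOME and $PATH unexpanded, so the profile stores the variable references rather than their current values.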

Start Scala

tg@master:/$ scala

Welcome to Scala 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.7.0_80).

Type in expressions for evaluation. Or try :help.

scala> 9*9

res0: Int = 81

Install Spark

----------------

tg@master:~$ cp ~/Desktop/spark-1.6.1-bin-hadoop2.6.tgz /software/

tg@master:~$ cd /software/

tg@master:/software$ ls

apache-hive-2.0.0-bin jdk-7u80-linux-x64.tar.gz

apache-hive-2.0.0-bin.tar.gz scala-2.11.8

hadoop-2.6.4 scala-2.11.8.tgz

hadoop-2.6.4.tar.gz spark-1.6.1-bin-hadoop2.6.tgz

hbase-1.2.1 zookeeper-3.4.8

hbase-1.2.1-bin.tar.gz zookeeper-3.4.8.tar.gz

jdk1.7.0_80

tg@master:/software$ tar -zxvf spark-1.6.1-bin-hadoop2.6.tgz
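SPARK_HOME has to name the exact directory that tar creates, and that name is easy to mistype. As a sketch, assuming the archive has a single top-level directory (as the Spark and Scala tarballs used here appear to), the hypothetical helper tgz_top_dir lists the archive without extracting it and prints that directory name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the top-level directory stored in a .tgz archive.
tgz_top_dir() {
  # tar -tzf lists member paths without extracting;
  # keep the first path component of the first entry.
  tar -tzf "$1" | head -n 1 | cut -d/ -f1
}

# Example: tgz_top_dir /software/spark-1.6.1-bin-hadoop2.6.tgz
```

For this tutorial it should print spark-1.6.1-bin-hadoop2.6, the directory used for SPARK_HOME.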

Add environment variables

sudo gedit /etc/profile

export SPARK_HOME=/software/spark-1.6.1-bin-hadoop2.6

export PATH=$SPARK_HOME/bin:$PATH

source /etc/profile
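After source /etc/profile, it is worth confirming that $SPARK_HOME/bin really is a PATH entry before relying on spark-shell. A small sketch with a hypothetical helper path_has_dir; command -v is the standard shell builtin for checking that a command is found.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if DIR is an exact entry of a PATH-style string.
# Wrapping both sides in ':' prevents prefix matches such as /opt/x vs /opt/xy.
path_has_dir() {
  local path="$1" dir="$2"
  case ":$path:" in
    *":$dir:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example, after `source /etc/profile`:
#   path_has_dir "$PATH" "$SPARK_HOME/bin" && command -v spark-shell
```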

Edit spark-env.sh

tg@master:~$ cd /software/spark-1.6.1-bin-hadoop2.6/conf/

tg@master:/software/spark-1.6.1-bin-hadoop2.6/conf$ ls

docker.properties.template metrics.properties.template spark-env.sh.template

fairscheduler.xml.template slaves.template

log4j.properties.template spark-defaults.conf.template

tg@master:/software/spark-1.6.1-bin-hadoop2.6/conf$ cp spark-env.sh.template spark-env.sh

tg@master:/software/spark-1.6.1-bin-hadoop2.6/conf$ sudo gedit spark-env.sh

Add:

export SCALA_HOME=/software/scala-2.11.8

export JAVA_HOME=/software/jdk1.7.0_80

export SPARK_MASTER_IP=192.168.52.140

export SPARK_WORKER_MEMORY=512m

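export MASTER=spark://192.168.52.140:7077

MASTER must be uppercase: it is the variable spark-shell and spark-submit check for a default master URL. 7077 is the default port of the standalone master.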
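The same settings can be written by a script that derives the master URL from SPARK_MASTER_IP, so the two values cannot drift apart. This is a sketch with a hypothetical function write_spark_env; it assumes the standalone master's default port 7077 and the uppercase MASTER variable. It overwrites the target file, which at this point only holds the template's comments.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write this tutorial's spark-env.sh settings to a file.
# Assumption: the standalone master uses port 7077 unless a third argument is given.
write_spark_env() {
  local target="$1" master_ip="$2" master_port="${3:-7077}"
  cat > "$target" <<EOF
export SCALA_HOME=/software/scala-2.11.8
export JAVA_HOME=/software/jdk1.7.0_80
export SPARK_MASTER_IP=${master_ip}
export SPARK_WORKER_MEMORY=512m
export MASTER=spark://${master_ip}:${master_port}
EOF
}

# Example: write_spark_env "$SPARK_HOME/conf/spark-env.sh" 192.168.52.140
```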

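Edit slaves

tg@master:/software/spark-1.6.1-bin-hadoop2.6/conf$ cp slaves.template slaves

tg@master:/software/spark-1.6.1-bin-hadoop2.6/conf$ sudo gedit slaves

List one worker hostname per line. On this single-node setup the master host also runs the only worker, so the file contains just:

master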
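sbin/start-all.sh starts one Worker over SSH on every host listed in conf/slaves. The sketch below approximates how that file is read: one hostname per line, with # comments and blank lines dropped. It is loosely modeled on the sed filter in Spark's sbin/slaves.sh; the name list_workers is hypothetical and the whitespace trimming is an extra of this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the worker hostnames listed in a slaves file.
list_workers() {
  sed -e 's/#.*$//' \
      -e 's/[[:space:]]*$//' \
      -e 's/^[[:space:]]*//' \
      -e '/^$/d' "$1"
  # 1) strip comments  2) strip trailing blanks  3) strip leading blanks  4) drop empty lines
}

# Example: list_workers "$SPARK_HOME/conf/slaves"
```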

Start the cluster

tg@master:/software/spark-1.6.1-bin-hadoop2.6$ sbin/start-all.sh

starting org.apache.spark.deploy.master.Master, logging to /software/spark-1.6.1-bin-hadoop2.6/logs/spark-tg-org.apache.spark.deploy.master.Master-1-master.out

master: starting org.apache.spark.deploy.worker.Worker, logging to /software/spark-1.6.1-bin-hadoop2.6/logs/spark-tg-org.apache.spark.deploy.worker.Worker-1-master.out

Check the processes with jps: Master and Worker now appear alongside the Hadoop, HBase and ZooKeeper daemons.

tg@master:/software/hbase-1.2.1/conf$ jps

4400 HRegionServer

3033 DataNode

5794 Jps

4793 Main

3467 ResourceManager

5652 SparkSubmit

5478 Master

3591 NodeManager

3240 SecondaryNameNode

3910 QuorumPeerMain

2911 NameNode

5567 Worker

4246 HMaster

tg@master:/software/hbase-1.2.1/conf$
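Scanning the jps list by eye works, but a plain grep Master would also match HBase's HMaster. A sketch that checks the second jps column for the exact names Master and Worker; check_spark_daemons is a hypothetical helper that reads jps output from stdin, so it can be tried without a running cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that jps output (on stdin) lists Master and Worker.
# jps prints "<pid> <main class name>" per line; compare column 2 exactly.
check_spark_daemons() {
  local out name missing=0
  out=$(cat)
  for name in Master Worker; do
    if ! printf '%s\n' "$out" | awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Example: jps | check_spark_daemons && echo "Spark Master and Worker are running"
```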

tg@master:/software/spark-1.6.1-bin-hadoop2.6$ spark-shell

log4j:WARN No appenders could be found for logger (org.apache.hadoop.metrics2.lib.MutableMetricsFactory).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

Using Spark's repl log4j profile: org/apache/spark/log4j-defaults-repl.properties

To adjust logging level use sc.setLogLevel("INFO")

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 1.6.1

/_/

Using Scala version 2.10.5 (Java HotSpot(TM) 64-Bit Server VM, Java 1.7.0_80)

Type in expressions to have them evaluated.

Type :help for more information.

Spark context available as sc.

16/05/31 02:17:47 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)

16/05/31 02:17:49 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)

16/05/31 02:18:02 WARN ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0

16/05/31 02:18:03 WARN ObjectStore: Failed to get database default, returning NoSuchObjectException

16/05/31 02:18:10 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)

16/05/31 02:18:11 WARN Connection: BoneCP specified but not present in CLASSPATH (or one of dependencies)

16/05/31 02:18:19 WARN ObjectStore: Version information not found in metastore. hive.metastore.schema.verification is not enabled so recording the schema version 1.2.0

16/05/31 02:18:19 WARN ObjectStore: Failed to get database default, returning NoSuchObjectException

SQL context available as sqlContext.

scala>

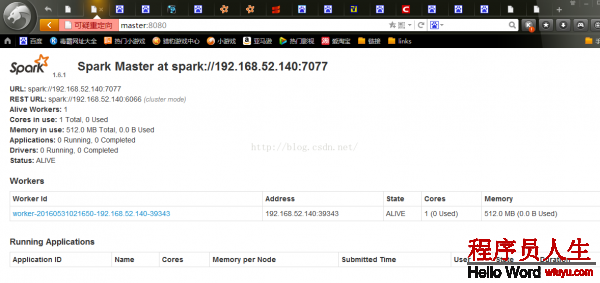

View

View jobs